#include <fixed_pool.h>

Public Types

| enum | { kNodeSize = nodeSize , kNodeCount = nodeCount , kNodesSize = nodeCount * nodeSize , kBufferSize = kNodesSize + ((nodeAlignment > 1) ? nodeSize-1 : 0) + nodeAlignmentOffset , kNodeAlignment = nodeAlignment , kNodeAlignmentOffset = nodeAlignmentOffset } |

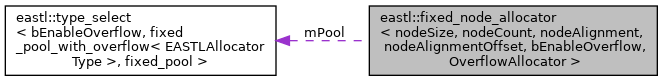

| typedef type_select< bEnableOverflow, fixed_pool_with_overflow< OverflowAllocator >, fixed_pool >::type | pool_type |

| typedef fixed_node_allocator< nodeSize, nodeCount, nodeAlignment, nodeAlignmentOffset, bEnableOverflow, OverflowAllocator > | this_type |

| typedef OverflowAllocator | overflow_allocator_type |

Public Member Functions

| fixed_node_allocator (void *pNodeBuffer) | |

| fixed_node_allocator (void *pNodeBuffer, const overflow_allocator_type &allocator) | |

| fixed_node_allocator (const this_type &x) | |

| this_type & | operator= (const this_type &x) |

| void * | allocate (size_t n, int=0) |

| void * | allocate (size_t n, size_t alignment, size_t offset, int=0) |

| void | deallocate (void *p, size_t) |

| bool | can_allocate () const |

| void | reset (void *pNodeBuffer) |

| const char * | get_name () const |

| void | set_name (const char *pName) |

| const overflow_allocator_type & | get_overflow_allocator () const EA_NOEXCEPT |

| overflow_allocator_type & | get_overflow_allocator () EA_NOEXCEPT |

| void | set_overflow_allocator (const overflow_allocator_type &allocator) |

| void | copy_overflow_allocator (const this_type &x) |

Public Attributes

| pool_type | mPool |

Detailed Description

template<size_t nodeSize, size_t nodeCount, size_t nodeAlignment, size_t nodeAlignmentOffset, bool bEnableOverflow, typename OverflowAllocator = EASTLAllocatorType>

class eastl::fixed_node_allocator< nodeSize, nodeCount, nodeAlignment, nodeAlignmentOffset, bEnableOverflow, OverflowAllocator >

Note: This class was previously named fixed_node_pool. It was renamed because that name was inconsistent with the other allocators here, which end with _allocator.

Implements a fixed_pool with a given node count, alignment, and alignment offset. fixed_node_allocator is like fixed_pool except it is templated on the node type instead of being a generic allocator. All it does is pass allocations through to the fixed_pool base. This functionality is separate from fixed_pool because there are other uses for fixed_pool.

We template on kNodeSize instead of node_type because the former lets two different node_types of the same size share the same template implementation.
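The effect of templating on size rather than type can be shown with a stand-in template. The sketch below does not use EASTL; `sized_allocator`, `typed_allocator`, `NodeA`, and `NodeB` are hypothetical names introduced only for illustration.

```cpp
#include <cstddef>
#include <type_traits>

// Hypothetical stand-in for an allocator templated on node size (not EASTL code).
template <std::size_t nodeSize>
struct sized_allocator
{
    static constexpr std::size_t kNodeSize = nodeSize;
};

// Hypothetical stand-in for an allocator templated on the node type instead.
template <typename Node>
struct typed_allocator
{
    static constexpr std::size_t kNodeSize = sizeof(Node);
};

// Two unrelated node types that are assumed to have the same size.
struct NodeA { char bytes[16]; };
struct NodeB { unsigned char data[16]; };
static_assert(sizeof(NodeA) == sizeof(NodeB), "example assumes equal sizes");

// Size-based: both node types map to the very same instantiation.
static_assert(std::is_same<sized_allocator<sizeof(NodeA)>,
                           sized_allocator<sizeof(NodeB)>>::value,
              "one shared instantiation");

// Type-based: each node type produces its own separate instantiation.
static_assert(!std::is_same<typed_allocator<NodeA>,
                            typed_allocator<NodeB>>::value,
              "two distinct instantiations");
```

With the size-based form, the compiler generates the allocator code once for every node type of a given size, which is the saving the paragraph above describes.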

Template parameters:

- nodeSize: The size of the object to allocate.
- nodeCount: The number of objects the pool contains.
- nodeAlignment: The alignment of the objects to allocate.
- nodeAlignmentOffset: The alignment offset of the objects to allocate.
- bEnableOverflow: Whether or not we should use the overflow heap if our object pool is exhausted.
- OverflowAllocator: Overflow allocator, which is only used if bEnableOverflow == true. Defaults to the global heap.
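As a worked example of how these parameters combine, the sketch below restates the `kNodesSize` and `kBufferSize` formulas from the enum under Public Types in a standalone template. `node_buffer_sizes` is a hypothetical name, not part of EASTL, and the parameter values are arbitrary.

```cpp
#include <cstddef>

// Standalone restatement of the enum formulas listed under Public Types
// (hypothetical helper, not the EASTL class itself).
template <std::size_t nodeSize, std::size_t nodeCount,
          std::size_t nodeAlignment, std::size_t nodeAlignmentOffset>
struct node_buffer_sizes
{
    static constexpr std::size_t kNodesSize  = nodeCount * nodeSize;
    static constexpr std::size_t kBufferSize =
        kNodesSize + ((nodeAlignment > 1) ? nodeSize - 1 : 0) + nodeAlignmentOffset;
};

// 100 nodes of 24 bytes, 8-byte aligned, no alignment offset.
using example_sizes = node_buffer_sizes<24, 100, 8, 0>;
static_assert(example_sizes::kNodesSize  == 2400, "100 * 24");
static_assert(example_sizes::kBufferSize == 2423, "2400 + (24 - 1) + 0");

// With an alignment of 1, no extra slack is reserved.
static_assert(node_buffer_sizes<24, 100, 1, 0>::kBufferSize == 2400, "no slack");
```

The extra `nodeSize - 1` bytes appear only when `nodeAlignment > 1`. Presumably they leave room to shift the first node to an aligned address, and the buffer passed as `pNodeBuffer` is expected to hold at least `kBufferSize` bytes.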

Constructor & Destructor Documentation

◆ fixed_node_allocator()

| fixed_node_allocator (const this_type &x) | inline |

Note that we are copying x.mpHead to our own fixed_pool. At first this may seem broken, as fixed pools cannot take over ownership of other fixed pools' memory. However, we declare that this copy ctor can only ever be safely called when the user has intentionally pre-seeded the source with the destination pointer. This is somewhat playing with fire, but it lets us get around chicken-and-egg problems with containers being their own allocators, without incurring any memory or extra code costs.

There is another reason for this: we very strongly want to avoid copying full instances of fixed_pool around, especially via the stack. Larger pools won't even fit on many machines' stacks, so this solution is also a mechanism to prevent that situation from existing and being used. Perhaps some day we'll find a more elegant yet costless way around this.
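The pre-seeding idea can be sketched without EASTL. In the sketch below, `seeded_pool`, `container_base`, and `tiny_container` are hypothetical stand-ins. The container builds a temporary allocator seeded with its own buffer address, and the copy constructor copies only that pointer, so the copy ends up referring to the destination's memory. This is an illustration of the pattern described above, not the library's actual code.

```cpp
#include <cassert>

// Hypothetical miniature pool: the copy ctor copies only the head pointer.
struct seeded_pool
{
    void* mpHead;

    explicit seeded_pool(void* pNodeBuffer) : mpHead(pNodeBuffer) {}
    seeded_pool(const seeded_pool& x) : mpHead(x.mpHead) {} // pointer copy, no buffer copy
};

// Hypothetical container base that stores its allocator by value.
struct container_base
{
    seeded_pool mAllocator;

    // The copy constructor of seeded_pool runs here.
    explicit container_base(const seeded_pool& allocator) : mAllocator(allocator) {}
};

// Hypothetical container that owns the node buffer and is its own allocator.
struct tiny_container : container_base
{
    char mBuffer[64];

    // The temporary (source) is pre-seeded with the destination's buffer address.
    tiny_container() : container_base(seeded_pool(mBuffer)) {}
};

// Returns true if the copied allocator points at the container's own buffer.
inline bool seeded_copy_points_at_own_buffer()
{
    tiny_container c;
    return c.mAllocator.mpHead == static_cast<void*>(c.mBuffer);
}
```

Only a pointer-sized object is ever copied here, so the buffer itself never passes through the stack.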

Member Function Documentation

◆ can_allocate()

| bool can_allocate () const | inline |

Returns true if there are any free links.

◆ reset()

| void reset (void *pNodeBuffer) | inline |

This function unilaterally resets the fixed pool back to a newly initialized state. This is useful in tandem with container reset functionality.
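To make the behavior of `can_allocate` and `reset` concrete, here is a minimal free-list pool sketch. `tiny_fixed_pool` and `demo_pool` are hypothetical and much simpler than EASTL's fixed_pool. The sketch assumes each node is at least pointer-sized and that the buffer is suitably aligned.

```cpp
#include <cassert>
#include <cstddef>
#include <new>

// Hypothetical miniature fixed pool (not the EASTL implementation).
// Free nodes are chained through an intrusive singly linked list.
class tiny_fixed_pool
{
public:
    tiny_fixed_pool(void* pNodeBuffer, std::size_t nodeSize, std::size_t nodeCount)
        : mpHead(nullptr), mNodeSize(nodeSize), mNodeCount(nodeCount)
    {
        reset(pNodeBuffer);
    }

    // True if at least one free link remains.
    bool can_allocate() const { return mpHead != nullptr; }

    // Pops a node off the free list, or returns nullptr when exhausted.
    void* allocate()
    {
        Link* p = mpHead;
        if (p)
            mpHead = p->mpNext;
        return p;
    }

    // Pushes a node back onto the free list.
    void deallocate(void* p) { mpHead = ::new (p) Link{mpHead}; }

    // Rebuilds the free list over pNodeBuffer, forgetting outstanding allocations.
    void reset(void* pNodeBuffer)
    {
        mpHead = nullptr;
        char* base = static_cast<char*>(pNodeBuffer);
        for (std::size_t i = mNodeCount; i > 0; --i)
            mpHead = ::new (base + (i - 1) * mNodeSize) Link{mpHead};
    }

private:
    struct Link { Link* mpNext; };

    Link*       mpHead;
    std::size_t mNodeSize;
    std::size_t mNodeCount;
};

// Usage: exhaust a two-node pool, then reset it to its initial state.
inline bool demo_pool()
{
    alignas(void*) char buffer[2 * sizeof(void*)];
    tiny_fixed_pool pool(buffer, sizeof(void*), 2);

    void* a = pool.allocate();
    void* b = pool.allocate();
    const bool exhausted = !pool.can_allocate(); // both nodes handed out

    pool.reset(buffer);                          // a and b must no longer be used
    return a && b && exhausted && pool.can_allocate();
}
```

After `reset`, every node is free again regardless of what was allocated before, which is why a container can pair it with its own reset and skip per-node deallocation.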

The documentation for this class was generated from the following file:

- third_party/EASTL/include/EASTL/internal/fixed_pool.h